MotionSymphony Plugin Report

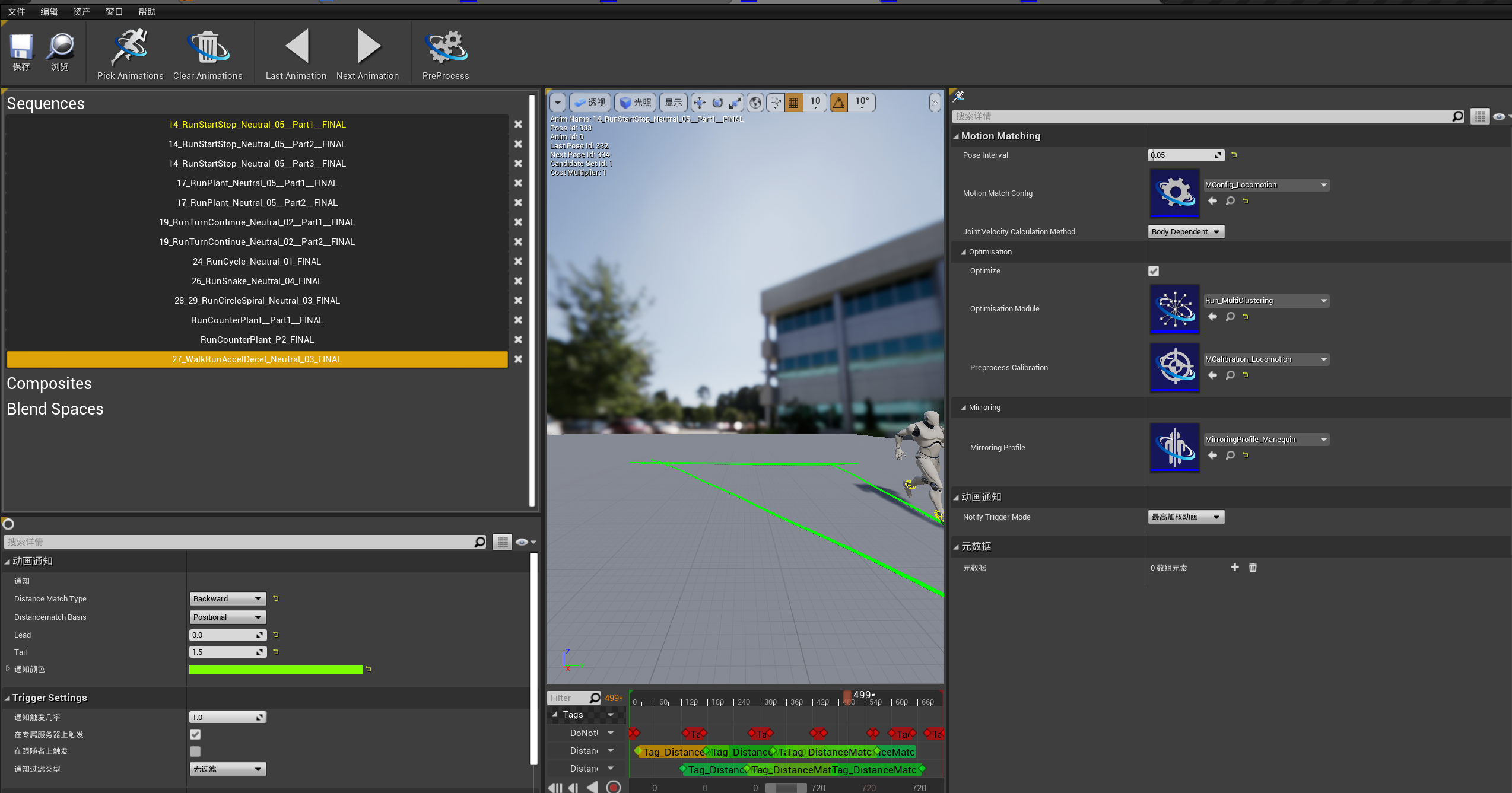

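Motion Symphony Assets and Data Structures

Assets

Motion Symphony is an Unreal Engine animation toolset that implements Motion Matching (MM), a data-driven approach to driving animation. It provides a complete set of tools and a workflow for building the animation dataset that MM needs. In Motion Symphony this dataset is called Motion Data. As the figure below shows, building a Motion Data asset requires several other assets that Motion Symphony also provides.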

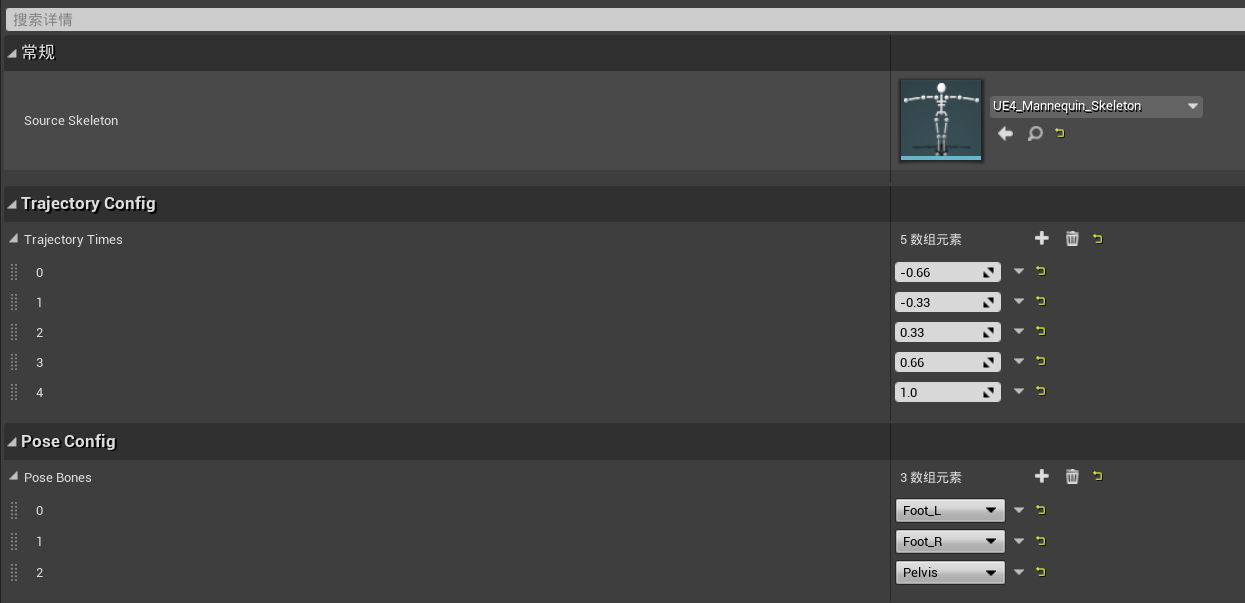

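Motion Matching works by searching the animation database for the pose to play next, based on the current pose and the desired future trajectory. For pose matching, a few bones are usually chosen to represent a pose. For the trajectory, a few past and future points are usually sampled. In Motion Symphony this information is defined in the Motion Matching Config asset: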

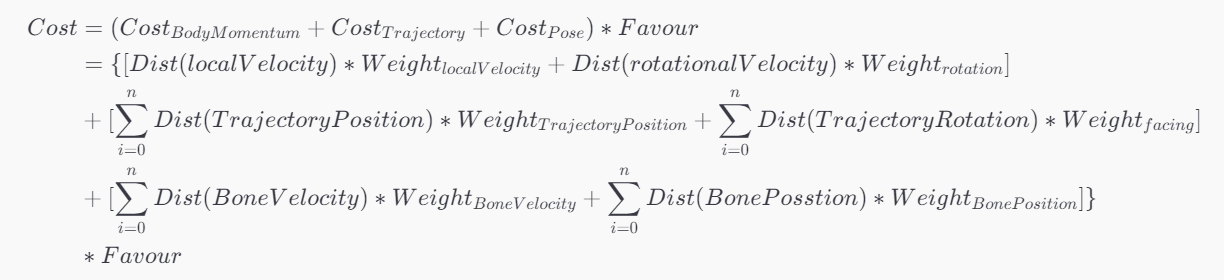

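The search in MM is a cost computation: it finds the animation frame that best matches the current pose and the future trajectory, and plays that frame next. Each feature gets its own weight in the cost, which expresses which features matter more. These weights are defined in the Motion Calibration asset: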

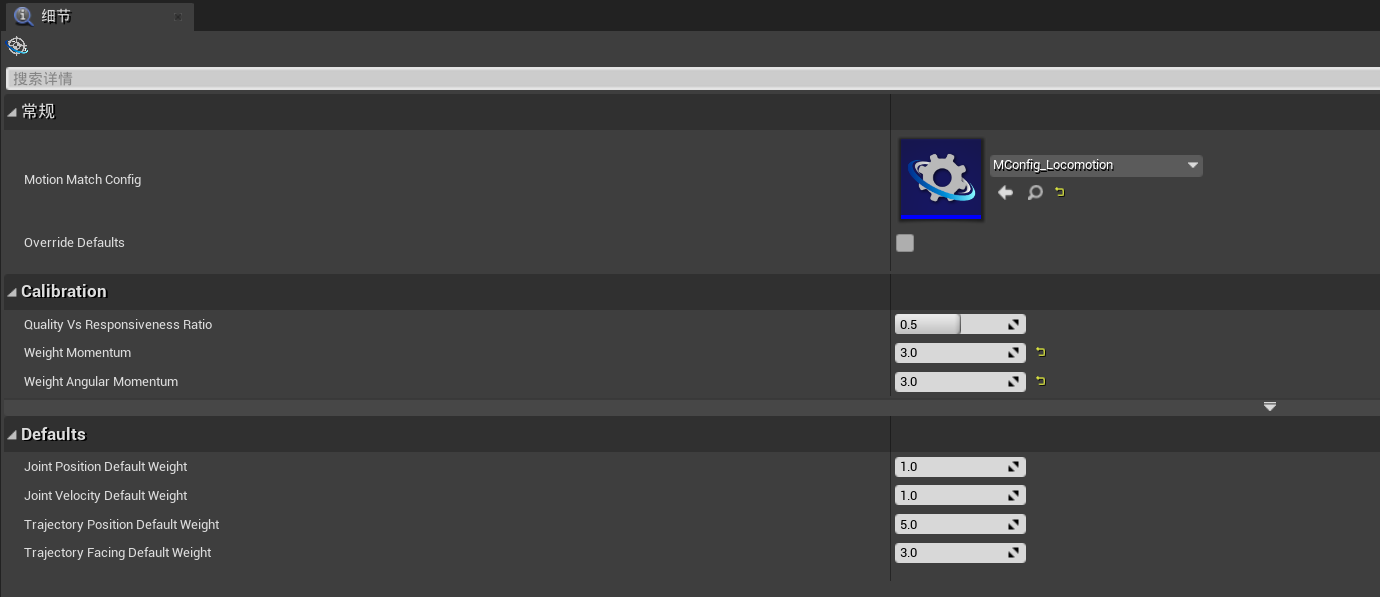

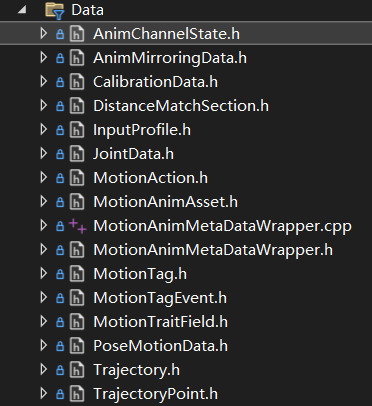

These three are the most important custom assets in Motion Symphony. In the source code they live in the CustomAssets directory:

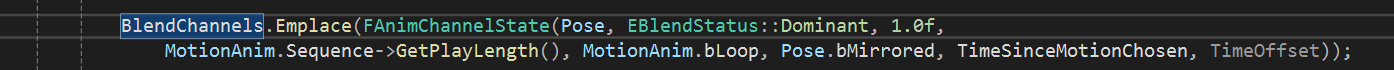

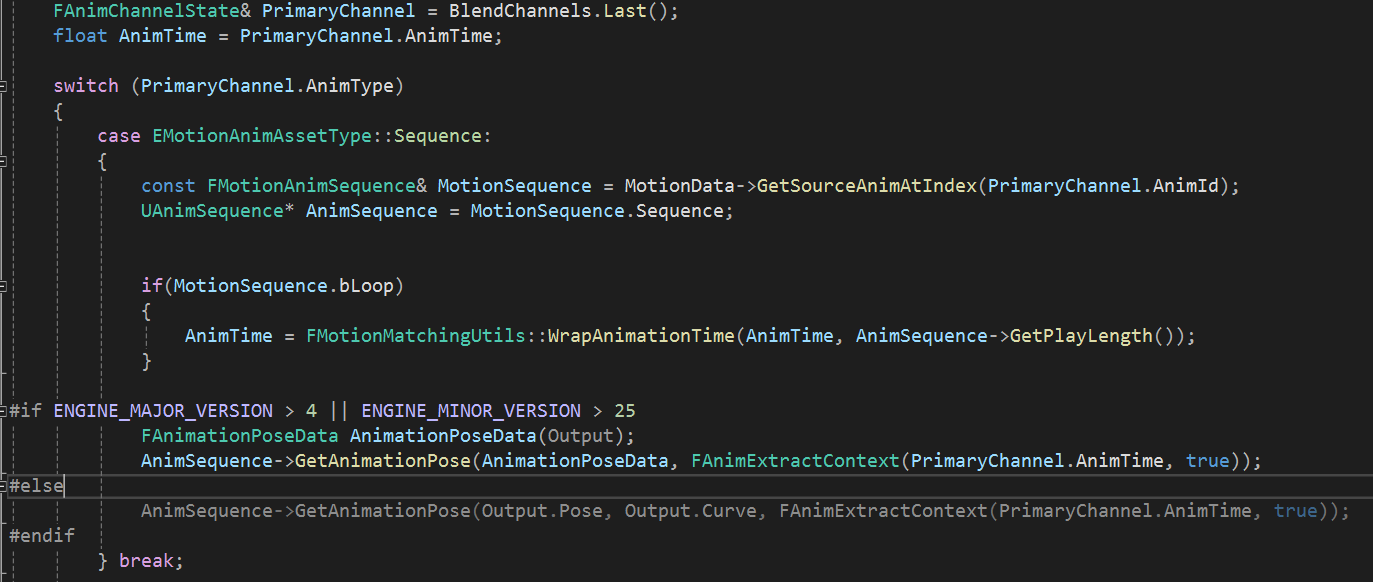

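Data Structures

Motion Symphony defines a number of data structures that simplify the computations in its animation nodes. AnimChannelState stores the animation ID, the animation weight, the animation type (sequence, blend space, etc.) and similar information. Keeping this data at hand means there is no need to look up the animation length, looping flag and so on via the AnimId every time.

AnimNode_MotionMatching maintains a BlendChannels array. Once MM has selected the animation frame to play, that frame is wrapped in an AnimChannelState and appended to BlendChannels. FAnimNode_MotionMatching::Evaluate_AnyThread(FPoseContext& Output) then uses the BlendChannels array to pick the frame to play: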

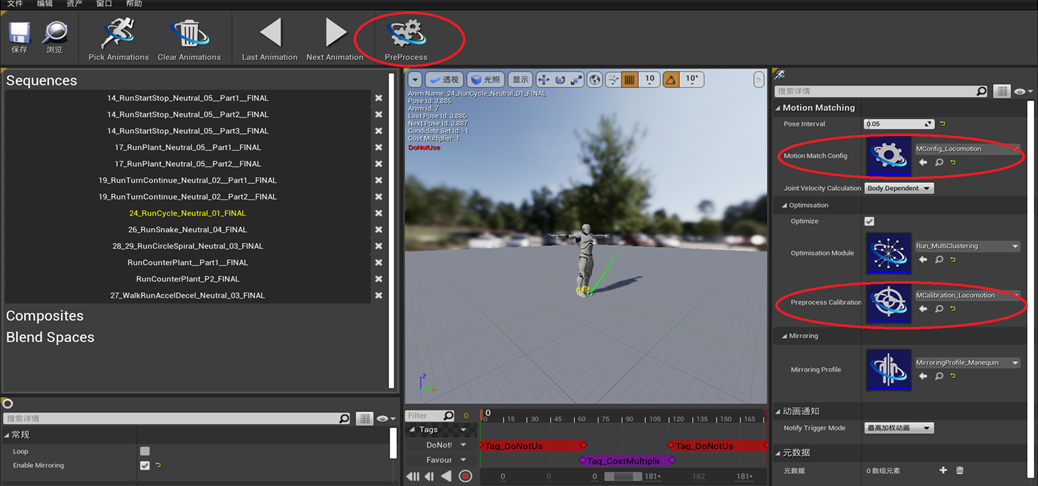
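The following simplified, standalone C++ sketch shows how a blend-channel list might be kept. All type and member names (the AnimChannelState fields, BlendChannelList, push/update/primary) are hypothetical. The linear weight ramp and the rule for discarding faded channels are also assumptions for illustration; none of this is taken from the plugin's source.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins: names and fields are illustrative only.
enum class AnimType { Sequence, BlendSpace, Composite };

struct AnimChannelState {
    int animId;      // which source animation the channel plays
    float animTime;  // playback position in that animation (seconds)
    float weight;    // current blend weight
    AnimType type;   // cached asset type
    bool loop;       // cached looping flag, so it need not be looked up via animId
};

class BlendChannelList {
public:
    // After a search picks a new pose, start a new channel at weight 0 so it can blend in.
    void push(int animId, float animTime, AnimType type, bool loop) {
        channels_.push_back({animId, animTime, 0.0f, type, loop});
    }

    // Advance time; the newest channel blends toward 1, older ones toward 0.
    void update(float dt, float blendTime) {
        const float step = blendTime > 0.0f ? dt / blendTime : 1.0f;
        for (std::size_t i = 0; i < channels_.size(); ++i) {
            AnimChannelState& c = channels_[i];
            c.animTime += dt;
            const bool newest = (i + 1 == channels_.size());
            c.weight = newest ? std::min(1.0f, c.weight + step) : std::max(0.0f, c.weight - step);
        }
        // Discard older channels that have fully blended out.
        std::vector<AnimChannelState> kept;
        for (std::size_t i = 0; i < channels_.size(); ++i) {
            if (i + 1 == channels_.size() || channels_[i].weight > 0.0f) kept.push_back(channels_[i]);
        }
        channels_.swap(kept);
    }

    // In this sketch the newest channel determines the frame being played.
    const AnimChannelState& primary() const {
        assert(!channels_.empty());
        return channels_.back();
    }

    std::size_t size() const { return channels_.size(); }

private:
    std::vector<AnimChannelState> channels_;
};
```

Caching facts such as the looping flag in each channel lets the evaluation step use them directly, without looking them up by animation ID each time.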

Database Construction (PreProcess)

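The custom Motion Data asset is the animation database. Motion Symphony puts each type of animation into its own Motion Data: for example, running animations form one Motion Data and walking animations another. A state machine then handles the transitions between these states. Because each database being searched is smaller, this can noticeably improve MM's efficiency and accuracy.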

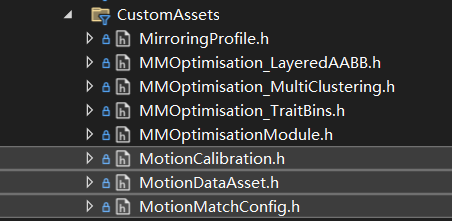

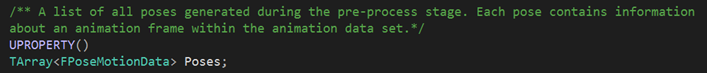

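PreProcess Framework

As shown above, after configuring a Motion Data's source animations, Calibration and Config, you click PreProcess. Preprocessing turns the animation database into a feature database, which reduces its storage size. The most important variable in Motion Data is Poses. Rather than storing entire poses, it stores each pose's features, PoseId, AnimType, NextPoseId and similar information.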

This step is performed by void UMotionDataAsset::PreProcess() (only the core code is shown):

```cpp
// Core of UMotionDataAsset::PreProcess() (excerpt). Receivers and left-hand
// sides that were lost when the code was extracted are marked /* … */.
void UMotionDataAsset::PreProcess()
{
    /* … */Initialize();
    ClearPoses();

    // Generate pose features for every source sequence (and its mirror, if enabled)
    for (int32 i = 0; i < SourceMotionAnims.Num(); ++i)
    {
        PreProcessAnim(i, false);

        if (MirroringProfile != nullptr && SourceMotionAnims[i].bEnableMirroring)
        {
            PreProcessAnim(i, true);
        }
    }

    // Build the sequential relationships between poses
    GeneratePoseSequencing();

    for (int32 i = 0; i < Poses.Num(); ++i)
    {
        /* … */AddUnique(Poses[i].Traits);
    }

    // Standard-deviation-based calibration data per used trait combination
    /* … */Empty(UsedMotionTraits.Num());
    for (const FMotionTraitField& MotionTrait : UsedMotionTraits)
    {
        /* … */Add(MotionTrait, FCalibrationData(this));
        /* … */GenerateStandardDeviationWeights(this, MotionTrait);
        /* … */Initialize();
    }

    // Optional optimisation module
    if (bOptimize && OptimisationModule)
    {
        /* … */BuildOptimisationStructures(this);
        /* … */ = true;
    }
    else
    {
        /* … */ = false;
    }

    /* … */ = true;
}
```

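In short, the code above first generates features for each type of source animation (the Poses array of FPoseMotionData entries). It then builds the sequential relationships between these poses and finally sets up the optimization module. The BuildOptimisationStructures call at this point serves as a check that the optimization module is valid.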
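The pose-sequencing step can be pictured as linking neighbouring samples of the same clip. The sketch below is a guess at the idea behind GeneratePoseSequencing, not its actual code. PoseRecord, lastPoseId and the wrap-around rule for looping clips are assumptions. The sketch also relies on each clip's poses being stored contiguously in sampling order.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical minimal pose record, not the plugin's FPoseMotionData.
struct PoseRecord {
    int poseId;
    int animId;
    int nextPoseId = -1;  // -1: no successor
    int lastPoseId = -1;  // -1: no predecessor
};

// Link each pose to its neighbours within the same animation.
// Looping animations additionally wrap last -> first (assumed rule).
void generatePoseSequencing(std::vector<PoseRecord>& poses, const std::vector<bool>& animLoops) {
    std::size_t start = 0;
    while (start < poses.size()) {
        // Find the contiguous run [start, end] belonging to one animation.
        std::size_t end = start;
        while (end + 1 < poses.size() && poses[end + 1].animId == poses[start].animId) ++end;

        for (std::size_t i = start; i <= end; ++i) {
            if (i < end) poses[i].nextPoseId = poses[i + 1].poseId;
            if (i > start) poses[i].lastPoseId = poses[i - 1].poseId;
        }

        const int anim = poses[start].animId;
        assert(anim >= 0 && static_cast<std::size_t>(anim) < animLoops.size());
        if (animLoops[static_cast<std::size_t>(anim)]) {
            poses[end].nextPoseId = poses[start].poseId;
            poses[start].lastPoseId = poses[end].poseId;
        }
        start = end + 1;
    }
}
```

A NextPoseId link is what later allows the search to recognise "the pose that simply continues the current clip" and favour it.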

The core of PreProcess is therefore **void UMotionDataAsset::PreProcessAnim(const int32 SourceAnimIndex, const bool bMirror)**. Its source is shown below:

```cpp
// Core of UMotionDataAsset::PreProcessAnim() (excerpt). Text lost during
// extraction is marked /* … */; the lookup of MotionAnim and Sequence from
// SourceAnimIndex, several declarations and some arguments were not captured.
void UMotionDataAsset::PreProcessAnim(const int32 SourceAnimIndex, const bool bMirror)
{
    const float AnimLength = Sequence->GetPlayLength();
    float CurrentTime = 0.0f;
    float TimeHorizon = MotionMatchConfig->TrajectoryTimes.Last();

    /* … */CreateMotionTraitFieldFromArray(MotionAnim.TraitNames);

    if (PoseInterval < 0.01f)
    {
        /* … */ = 0.05f;
    }

    /* … */Num();

    // Sample one pose every PoseInterval seconds
    while (CurrentTime <= AnimLength)
    {
        /* … */Num();

        bool bDoNotUse = ((CurrentTime < TimeHorizon) && (MotionAnim.PastTrajectory == ETrajectoryPreProcessMethod::IgnoreEdges))
            /* … */ ? true : false;

        if (MotionAnim.bLoop)
        {
            /* … */ = false;
        }

        // Root velocity
        float RootRotVelocity;
        /* … */ExtractRootVelocity(RootVelocity, RootRotVelocity, Sequence, CurrentTime, PoseInterval);

        if (bMirror)
        {
            /* … */ *= -1.0f;
            /* … */ *= -1.0f;
        }

        float PoseCostMultiplier = MotionAnim.CostMultiplier;
        /* … */FPoseMotionData(PoseId, EMotionAnimAssetType::Sequence, /* … */);

        // Trajectory points
        for (int32 i = 0; i < MotionMatchConfig->TrajectoryTimes.Num(); ++i)
        {
            if (MotionAnim.bLoop)
            {
                /* … */ExtractLoopingTrajectoryPoint(Point, Sequence, CurrentTime, MotionMatchConfig->TrajectoryTimes[i]);
            }
            else
            {
                float PointTime = MotionMatchConfig->TrajectoryTimes[i];
                if (PointTime < 0.0f)
                {
                    /* … */ExtractPastTrajectoryPoint(Point, Sequence, CurrentTime, PointTime, /* … */);
                }
                else
                {
                    /* … */ExtractFutureTrajectoryPoint(Point, Sequence, CurrentTime, PointTime, /* … */);
                }
            }

            if (MotionAnim.bFlattenTrajectory)
            {
                /* … */ = 0.0f;
            }

            if (bMirror)
            {
                /* … */ *= -1.0f;
                /* … */ *= -1.0f;
            }

            /* … */Add(Point);
        }

        // Joint data
        const FReferenceSkeleton& RefSkeleton = Sequence->GetSkeleton()->GetReferenceSkeleton();
        for (int32 i = 0; i < MotionMatchConfig->PoseBones.Num(); ++i)
        {
            if (bMirror)
            {
                /* … */FindBoneMirror(BoneName);
                const int32 MirrorBoneIndex = RefSkeleton.FindBoneIndex(MirrorBoneName);
                /* … */ExtractJointData(JointData, Sequence, MirrorBoneIndex, CurrentTime, PoseInterval);
                /* … */ *= -1.0f;
                /* … */ *= -1.0f;
            }
            else
            {
                /* … */ExtractJointData(JointData, Sequence, MotionMatchConfig->PoseBones[i], CurrentTime, PoseInterval);
            }

            /* … */Add(JointData);
        }

        /* … */Add(NewPoseData);
    }

    // Tags
    for (FAnimNotifyEvent& NotifyEvent : MotionAnim.Tags)
    {
        /* … */Cast<UTagSection>(NotifyEvent.NotifyStateClass);
        if (TagSection)
        {
            float TagStartTime = NotifyEvent.GetTriggerTime();
            /* … */PreProcessTag(MotionAnim, this, TagStartTime, TagStartTime + NotifyEvent.Duration);

            /* … */RoundHalfToEven(NotifyEvent.GetTriggerTime() / PoseInterval);
            /* … */RoundHalfToEven((NotifyEvent.GetTriggerTime() + NotifyEvent.Duration) / PoseInterval);
            /* … */Clamp(TagStartPoseId, 0, Poses.Num());
            /* … */Clamp(TagEndPoseId, 0, Poses.Num());

            /* … */GetTriggerTime();
            float TagEndTime = TagStartTime + NotifyEvent.GetDuration();
            for (int32 PoseIndex = TagStartPoseId; PoseIndex < TagEndPoseId; ++PoseIndex)
            {
                /* … */PreProcessPose(Poses[PoseIndex], MotionAnim, this, TagStartTime, TagEndTime);
            }

            continue;
        }

        /* … */Cast<UTagPoint>(NotifyEvent.Notify);
        if (TagPoint)
        {
            float TagTime = NotifyEvent.GetTriggerTime();
            /* … */RoundHalfToEven(TagTime / PoseInterval);
            /* … */Clamp(TagClosestPoseId, 0, Poses.Num());
            /* … */PreProcessTag(Poses[TagClosestPoseId], MotionAnim, this, TagTime);
        }
    }
// #endif  (the matching #if was not captured in the excerpt)
}
```

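To summarise, PreProcessAnim first extracts basic information. It then uses a series of utility functions defined in FMMPreProcessUtils to extract velocity, trajectory and joint information, and finally processes Tags. It is worth understanding how each of these is extracted, because any features we add later can be extracted in a similar way.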
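The outer sampling loop of PreProcessAnim can be reduced to the standalone sketch below, which keeps only what is visible in the excerpt:

- the 0.05 s fallback for intervals below 0.01 s;
- one pose per PoseInterval up to the clip length;
- the bDoNotUse rule: set when CurrentTime < TimeHorizon and edges are ignored, cleared for looping clips.

SampledPose and sampleClip are hypothetical names. The timeHorizon parameter stands in for MotionMatchConfig->TrajectoryTimes.Last().

```cpp
#include <cassert>
#include <vector>

// Hypothetical sample record (not FPoseMotionData).
struct SampledPose {
    int poseId;
    float time;     // sample time in the clip (seconds)
    bool doNotUse;  // excluded from the search
};

std::vector<SampledPose> sampleClip(float animLength, float poseInterval, float timeHorizon,
                                    bool ignoreEdges, bool loop, int firstPoseId) {
    assert(animLength >= 0.0f);
    if (poseInterval < 0.01f) poseInterval = 0.05f;  // same fallback as the excerpt

    std::vector<SampledPose> poses;
    float currentTime = 0.0f;
    while (currentTime <= animLength) {
        const int poseId = firstPoseId + static_cast<int>(poses.size());
        // Condition as in the excerpt: early poses are flagged when edges are ignored...
        bool doNotUse = currentTime < timeHorizon && ignoreEdges;
        // ...but poses of a looping clip are always kept.
        if (loop) doNotUse = false;
        poses.push_back({poseId, currentTime, doNotUse});
        currentTime += poseInterval;
    }
    return poses;
}
```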

Utility Functions in FMMPreProcessUtils

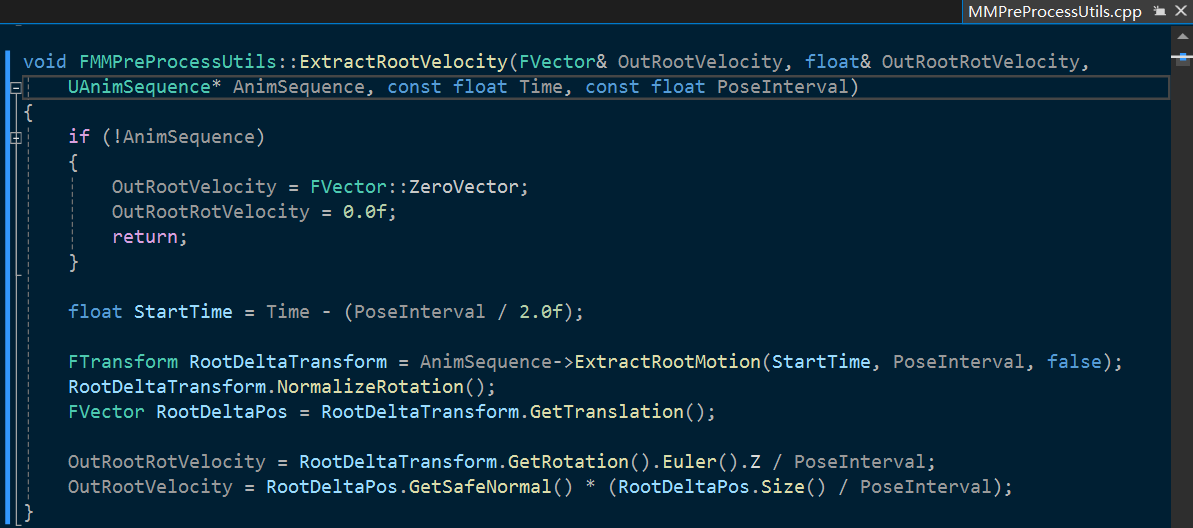

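Extracting root translation and rotation velocity: this calls the animation sequence's built-in root-motion extraction function and computes the root's linear and rotational velocity from the result.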

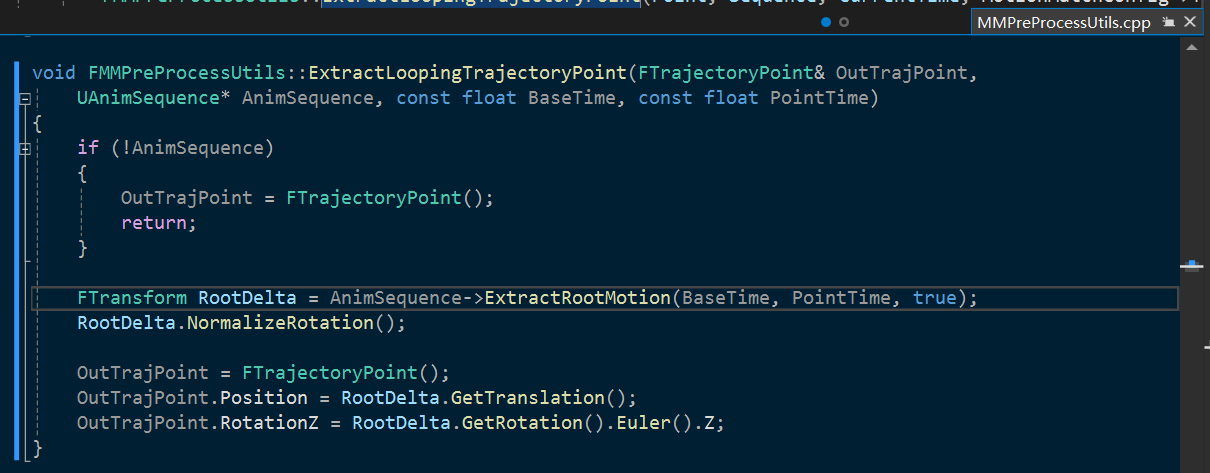
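Root velocity extraction boils down to a finite difference of root motion over one pose interval. The following sketch assumes root samples given as a 2D position plus a yaw. It expresses the velocity in the local frame of the earlier sample using a standard right-handed 2D rotation. Unreal's axis and sign conventions, and the exact time window the plugin samples, may differ. All names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };
struct RootSample { Vec2 pos; float yawDeg; };  // hypothetical root position + facing

// Wrap an angle difference into [-180, 180) degrees.
float deltaAngleDeg(float from, float to) {
    float d = std::fmod(to - from + 180.0f, 360.0f);
    if (d < 0.0f) d += 360.0f;
    return d - 180.0f;
}

// Finite-difference root velocity between two samples dt seconds apart.
// Linear velocity is rotated into the frame of sample `a` (yaw 0 faces +x).
void extractRootVelocity(const RootSample& a, const RootSample& b, float dt,
                         Vec2& outLocalVel, float& outRotVelDegPerSec) {
    assert(dt > 0.0f);
    const float kDegToRad = 3.14159265358979f / 180.0f;
    const float dx = b.pos.x - a.pos.x, dy = b.pos.y - a.pos.y;
    const float c = std::cos(-a.yawDeg * kDegToRad), s = std::sin(-a.yawDeg * kDegToRad);
    outLocalVel = {(dx * c - dy * s) / dt, (dx * s + dy * c) / dt};
    outRotVelDegPerSec = deltaAngleDeg(a.yawDeg, b.yawDeg) / dt;
}
```

Expressing the velocity in the character's local frame makes the feature independent of which direction the character happens to face in the world.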

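Extracting trajectory point positions and facing: this is split into three functions, one for looping animations, one for past points and one for future points.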

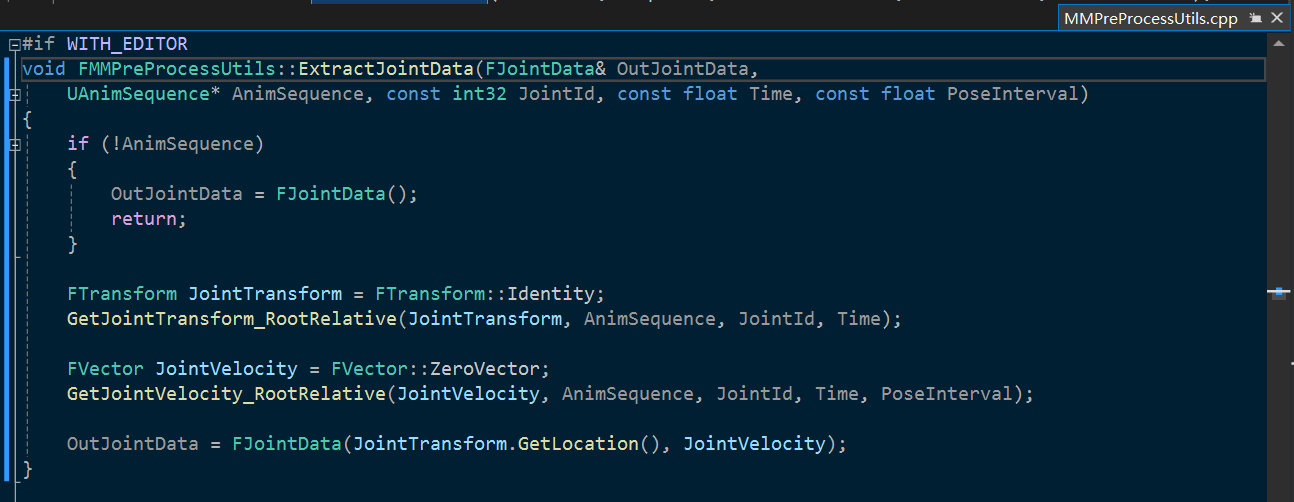
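The three trajectory-extraction cases can be illustrated in one dimension. In this sketch a looping clip wraps the time and accumulates the displacement of each full cycle. A non-looping clip instead extrapolates with the edge velocity beyond either end. The extrapolation policy is an assumption for illustration; the plugin selects its edge handling via ETrajectoryPreProcessMethod (for example IgnoreEdges). RootCurve and the function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical 1D root curve: root x position sampled every `step` seconds.
struct RootCurve {
    std::vector<float> x;
    float step;

    float length() const { return step * static_cast<float>(x.size() - 1); }

    // Linear interpolation for t in [0, length()].
    float sample(float t) const {
        assert(x.size() >= 2);
        const float f = t / step;
        const std::size_t i = std::min(static_cast<std::size_t>(f), x.size() - 2);
        return x[i] + (x[i + 1] - x[i]) * (f - static_cast<float>(i));
    }
};

// Looping clip: wrap the time and add the displacement of each whole cycle.
float loopingPosition(const RootCurve& c, float t) {
    const float len = c.length();
    const float cycles = std::floor(t / len);
    return c.sample(t - cycles * len) + cycles * (c.x.back() - c.x.front());
}

// Non-looping clip (assumed policy): extrapolate with the edge velocity outside the clip.
float extrapolatedPosition(const RootCurve& c, float t) {
    const std::size_t n = c.x.size();
    if (t < 0.0f) return c.x[0] + t * (c.x[1] - c.x[0]) / c.step;
    if (t > c.length()) return c.x[n - 1] + (t - c.length()) * (c.x[n - 1] - c.x[n - 2]) / c.step;
    return c.sample(t);
}

// A trajectory point is the root offset at (current + offset) relative to the current root.
float trajectoryPoint(const RootCurve& c, bool loop, float current, float offset) {
    auto pos = [&](float t) { return loop ? loopingPosition(c, t) : extrapolatedPosition(c, t); };
    return pos(current + offset) - pos(current);
}
```

A negative offset gives a past point and a positive offset a future point, matching how TrajectoryTimes is split in PreProcessAnim.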

Extracting joint data:

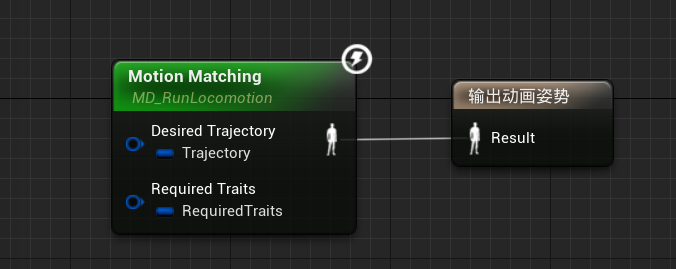

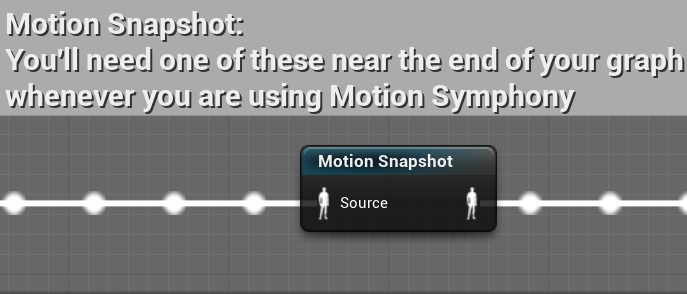

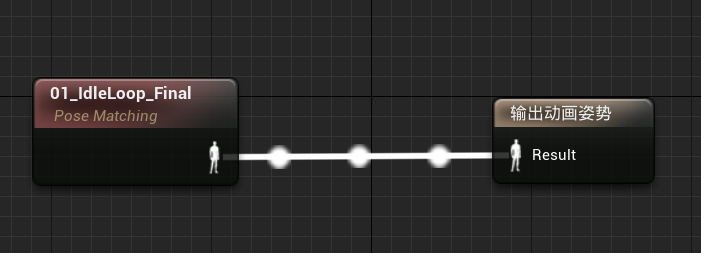

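AnimNode (Motion Symphony's Animation Nodes)

Motion Symphony provides many AnimNodes for different features. The most important are AnimNode_MotionMatching, AnimNode_MotionRecorder and AnimNode_PoseMatching, all three of which are used in the official sample project: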

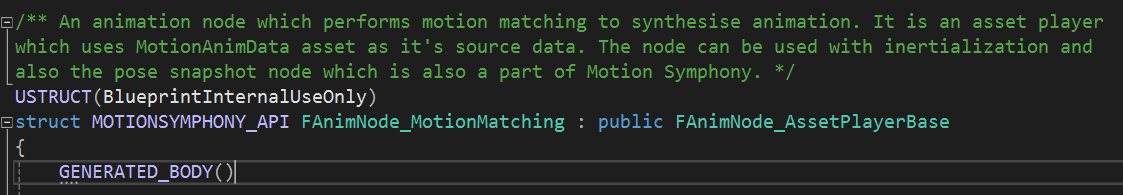

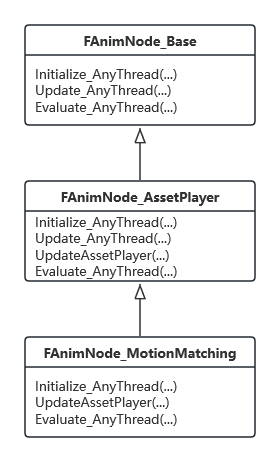

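AnimNode_MotionMatching (the MM Node)

The MM node inherits from FAnimNode_AssetPlayerBase, as do SequencePlayer and SequenceEvaluator. Intuitively, the MM node can therefore be thought of as an elaborate SequenceEvaluator: it works out which frame should be played now.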

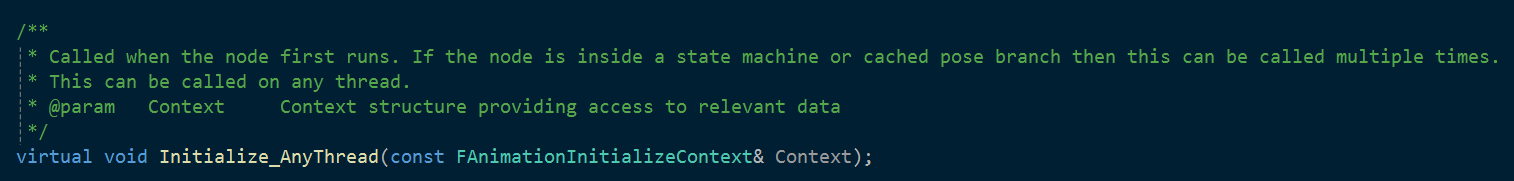

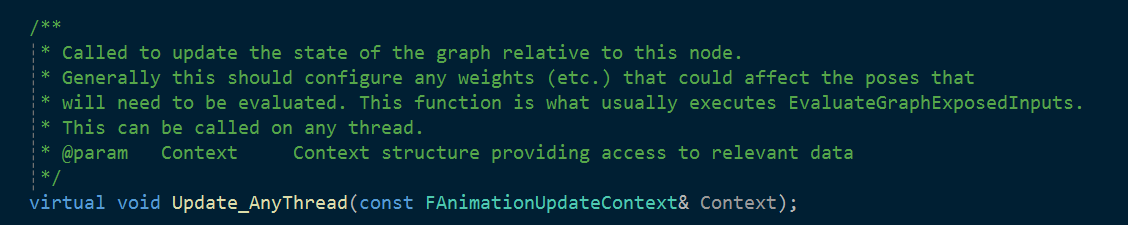

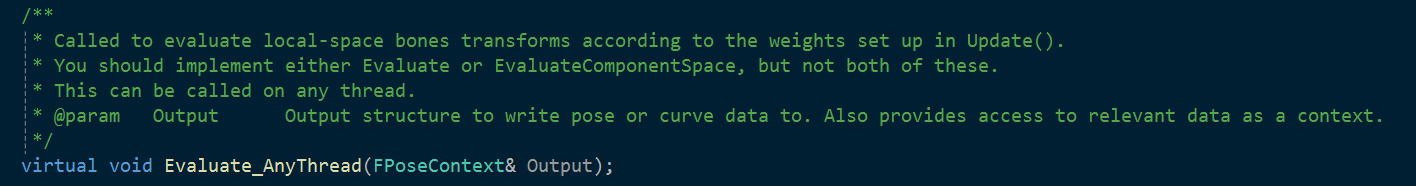

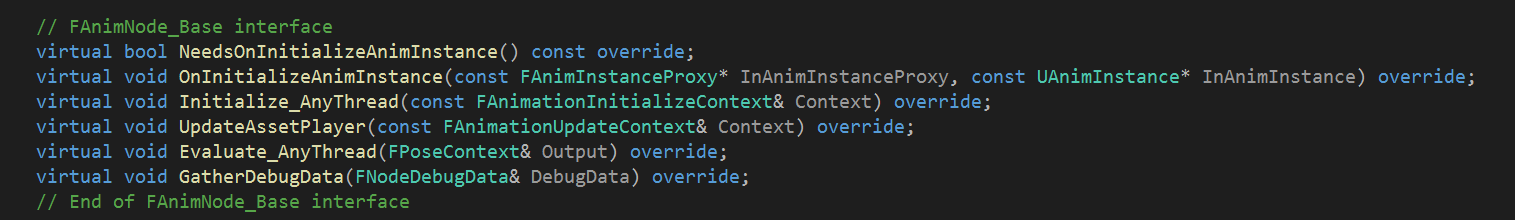

All AnimNodes inherit from FAnimNode_Base, which has several important functions:

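Initialize_AnyThread performs initialization the first time the node is used. Update_AnyThread is called when the graph updates and typically computes the weights that affect the bone pose. Evaluate_AnyThread uses the weights computed in Update to evaluate the bone transforms in local space.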

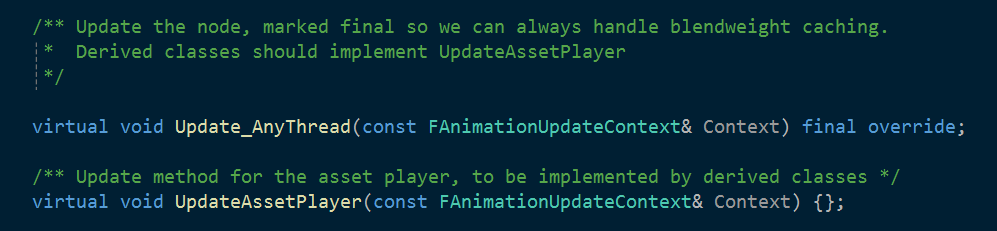

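AnimNode_MotionMatching also overrides several other FAnimNode_Base functions, which we will cover if they come up later. Update_AnyThread is a special case. The MM node inherits from FAnimNode_AssetPlayerBase, which declares Update_AnyThread final, so the MM node cannot override it. Its update logic lives in UpdateAssetPlayer instead, and the key functions and calls there are shown in the figure.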

Next, let's read through the logic of a few important functions.

Motion Matching matches against the current pose, so the first step is to obtain that pose, which ComputeCurrentPose does. Note that Motion Symphony uses two variables to represent the current pose:

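CurrentInterpolatedPose: the current pose, built from the pose recorded by FAnimNode_MotionRecorder.

CurrentChosenPoseId: the ID of the currently chosen pose, computed from the time elapsed since the last MM search. When a new MM search finds a match, the result is also assigned to this variable.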

Here is the source (much of the code is omitted; only the core is shown):

```cpp
// Core of FAnimNode_MotionMatching::ComputeCurrentPose() (excerpt; lost text marked /* … */)
void FAnimNode_MotionMatching::ComputeCurrentPose(const FCachedMotionPose& CachedMotionPose)
{
    const float PoseInterval = FMath::Max(0.01f, MotionData->PoseInterval);

    /* … */Last();
    float TimePassed = TimeSinceMotionChosen;
    /* … */ = 0;

    // Whole pose intervals passed since the pose was chosen,
    // rounded toward zero (ceil for negative time, floor otherwise)
    if (TimePassed < 0.0f)
    {
        /* … */CeilToInt(TimePassed / PoseInterval);
    }
    else
    {
        /* … */FloorToInt(TimePassed / PoseInterval);
    }

    // Interpolate between the two bracketing poses
    /* … */LerpPoseTrajectory(CurrentInterpolatedPose, *BeforePose, *AfterPose, PoseInterpolationValue);

    // Joint data comes from the bones cached by the motion recorder
    for (int32 i = 0; i < PoseBoneRemap.Num(); ++i)
    {
        const FCachedMotionBone& CachedMotionBone = CachedMotionPose.CachedBoneData[PoseBoneRemap[i]];
        /* … */FJointData(CachedMotionBone.Transform.GetLocation(), CachedMotionBone.Velocity);
    }
}
```
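The bracketing step in ComputeCurrentPose works out how many pose intervals have passed and how far the current time lies between two sampled poses. It can be sketched as below. For simplicity this version floors the step count for both signs instead of using CeilToInt for negative times, and it ignores clip boundaries. InterpIndices, bracketCurrentPose and lerpFeature are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct InterpIndices {
    int before;   // pose sampled at or before the current time
    int after;    // the next sampled pose
    float alpha;  // blend factor between them, in [0, 1)
};

InterpIndices bracketCurrentPose(int chosenPoseId, float timePassed, float poseInterval) {
    assert(chosenPoseId >= 0);
    poseInterval = std::fmax(0.01f, poseInterval);  // same lower bound as ComputeCurrentPose
    const float steps = timePassed / poseInterval;  // pose intervals since the pose was chosen
    const float whole = std::floor(steps);
    const int before = chosenPoseId + static_cast<int>(whole);
    return {before, before + 1, steps - whole};
}

// Linear interpolation of one scalar feature between the bracketing poses.
float lerpFeature(float a, float b, float alpha) { return a + (b - a) * alpha; }
```

Interpolating between samples lets the current pose be matched accurately even when the search runs between two pre-processed pose times.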

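Now that we know how the current pose is obtained, we can look at UpdateMotionMatching, the "main function" of the MM algorithm. Broadly, it first obtains the current pose and then calls SchedulePoseSearch: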

```cpp
// Core of FAnimNode_MotionMatching::UpdateMotionMatching() (excerpt; lost text marked /* … */)
void FAnimNode_MotionMatching::UpdateMotionMatching(const float DeltaTime, const FAnimationUpdateContext& Context)
{
    /* … */ = false;

    FAnimNode_MotionRecorder* MotionRecorderNode = /* … */GetAncestor<FAnimNode_MotionRecorder>();
    if (MotionRecorderNode)
    {
        ComputeCurrentPose(MotionRecorderNode->GetMotionPose());
    }
    else
    {
        ComputeCurrentPose();
    }

    if (CurrentInterpolatedPose.bDoNotUse)
    {
        /* … */ = true;
    }

    if (PastTrajectoryMode == EPastTrajectoryMode::CopyFromCurrentPose)
    {
        for (int32 i = 0; i < MMConfig->TrajectoryTimes.Num(); ++i)
        {
            if (MMConfig->TrajectoryTimes[i] > 0.0f)
            {
                break;   // stop at the first future (positive-time) point
            }
            /* … */
        }
    }

    if (TimeSinceMotionUpdate >= UpdateInterval || bForcePoseSearch)
    {
        /* … */ = 0.0f;
        SchedulePoseSearch(DeltaTime, Context);
    }
}
```
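The scheduling logic at the end of UpdateMotionMatching amounts to a timer with a force flag. This sketch rests on two assumptions: the timer accumulates DeltaTime on each update, and a DoNotUse current pose is what sets the force flag. SearchScheduler is a hypothetical name.

```cpp
#include <cassert>

// Hypothetical helper modelling when a pose search is triggered.
struct SearchScheduler {
    float updateInterval;          // seconds between regular searches
    float timeSinceUpdate = 0.0f;  // time since the last search

    // Returns true when a pose search should run this update.
    bool tick(float deltaTime, bool currentPoseDoNotUse) {
        assert(deltaTime >= 0.0f);
        timeSinceUpdate += deltaTime;
        const bool force = currentPoseDoNotUse;  // assumed source of bForcePoseSearch
        if (timeSinceUpdate >= updateInterval || force) {
            timeSinceUpdate = 0.0f;
            return true;
        }
        return false;
    }
};
```

Searching at a fixed interval rather than every frame is a cheap way to bound cost. The force flag makes sure the character never stays on a pose that was marked unusable.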

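The pose-matching function SchedulePoseSearch first derives the next pose from the currently chosen pose. We prefer continuous animation, that is, continuing the current animation where possible, so the cost computation favours this next pose and gives it a lower cost. The function then computes costs and takes the lowest-cost pose. It checks whether that pose is at the same location as the current pose recorded via FAnimNode_MotionRecorder (CurrentInterpolatedPose), or as the currently chosen pose (CurrentChosenPoseId). "Same location" means the same animation, less than 0.25 s apart and at nearly the same blend-space position. If the winner matches neither, the function transitions to it.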

```cpp
// Core of FAnimNode_MotionMatching::SchedulePoseSearch() (excerpt; lost text marked /* … */)
void FAnimNode_MotionMatching::SchedulePoseSearch(float DeltaTime, const FAnimationUpdateContext& Context)
{
    switch (PoseMatchMethod)
    {
        case EPoseMatchMethod::Optimized: { LowestPoseId = GetLowestCostPoseId(NextPose); } break;
        case EPoseMatchMethod::Linear:    { LowestPoseId = GetLowestCostPoseId_Linear(NextPose); } break;
    }

    // Is the winner essentially where the interpolated current pose already is?
    bool bWinnerAtSameLocation = BestPose.AnimId == CurrentInterpolatedPose.AnimId &&
        FMath::Abs(BestPose.Time - CurrentInterpolatedPose.Time) < 0.25f /* … */
        /* … */DistSquared(BestPose.BlendSpacePosition, CurrentInterpolatedPose.BlendSpacePosition) < 1.0f;

    if (!bWinnerAtSameLocation)
    {
        // ...or where the previously chosen pose is?
        bWinnerAtSameLocation = /* … */
            FMath::Abs(BestPose.Time - ChosenPose.Time) < 0.25f /* … */
            /* … */DistSquared(BestPose.BlendSpacePosition, ChosenPose.BlendSpacePosition) < 1.0f;

        if (!bWinnerAtSameLocation)
        {
            TransitionToPose(BestPose.PoseId, Context);
        }
    }
}
```

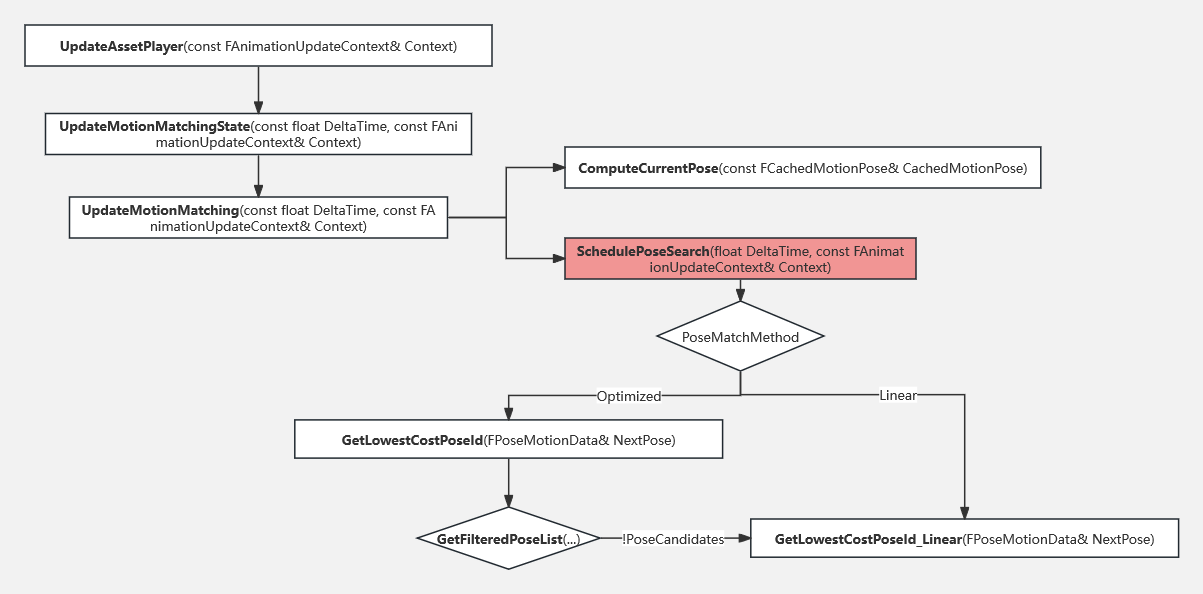
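The two "same location" checks in SchedulePoseSearch can be written as a small predicate. This sketch keeps the thresholds visible in the excerpt: same AnimId, less than 0.25 s apart, and squared blend-space distance below 1.0. The operator between the last two conditions was lost, so the sketch assumes they are combined with &&. PoseRef and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal view of a pose for the "same location" check.
struct PoseRef {
    int animId;
    float time;      // time within the animation (seconds)
    float bsX, bsY;  // blend-space position
};

bool atSameLocation(const PoseRef& a, const PoseRef& b) {
    const float dx = a.bsX - b.bsX, dy = a.bsY - b.bsY;
    return a.animId == b.animId && std::fabs(a.time - b.time) < 0.25f && dx * dx + dy * dy < 1.0f;
}

// Transition only if the winner differs from both the interpolated current pose
// and the pose chosen at the previous search.
bool shouldTransition(const PoseRef& best, const PoseRef& current, const PoseRef& chosen) {
    return !atSameLocation(best, current) && !atSameLocation(best, chosen);
}
```

The tolerance avoids restarting a transition to essentially the same frame that is already playing, which would otherwise cause needless blending.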

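Now we can look at the core of the MM algorithm, the matching itself, which is implemented in GetLowestCostPoseId and GetLowestCostPoseId_Linear. The only difference between the two is whether the database is filtered first. We therefore only look at GetLowestCostPoseId, again omitting some non-essential code: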

```cpp
// Core of FAnimNode_MotionMatching::GetLowestCostPoseId() (excerpt; lost text marked /* … */)
int32 FAnimNode_MotionMatching::GetLowestCostPoseId(FPoseMotionData& NextPose)
{
    TArray<FPoseMotionData>* PoseCandidates = /* … */GetFilteredPoseList(CurrentInterpolatedPose, RequiredTraits, FinalCalibration);

    // No filtered candidate set: fall back to a linear search
    if (!PoseCandidates)
    {
        return GetLowestCostPoseId_Linear(NextPose);
    }

    int32 LowestPoseId = 0;
    float LowestCost = 10000000.0f;
    for (FPoseMotionData& Pose : *PoseCandidates)
    {
        // Momentum: root linear and rotational velocity
        float Cost = FVector::DistSquared(CurrentInterpolatedPose.LocalVelocity, Pose.LocalVelocity) * FinalCalibration.Weight_Momentum;
        Cost += FMath::Abs(CurrentInterpolatedPose.RotationalVelocity - Pose.RotationalVelocity) /* … */;

        // Trajectory: position and facing of each trajectory point
        const int32 TrajectoryIterations = FMath::Min(DesiredTrajectory.TrajectoryPoints.Num(), FinalCalibration.TrajectoryWeights.Num());
        for (int32 i = 0; i < TrajectoryIterations; ++i)
        {
            const FTrajectoryWeightSet WeightSet = FinalCalibration.TrajectoryWeights[i];
            const FTrajectoryPoint CurrentPoint = DesiredTrajectory.TrajectoryPoints[i];
            const FTrajectoryPoint CandidatePoint = Pose.Trajectory[i];

            Cost += FVector::DistSquared(CandidatePoint.Position, CurrentPoint.Position) * WeightSet.Weight_Pos;
            Cost += FMath::Abs(FMath::FindDeltaAngleDegrees(CandidatePoint.RotationZ, CurrentPoint.RotationZ)) * WeightSet.Weight_Facing;
        }

        // Pose: velocity and position of each matched joint
        for (int32 i = 0; i < CurrentInterpolatedPose.JointData.Num(); ++i)
        {
            const FJointWeightSet WeightSet = FinalCalibration.PoseJointWeights[i];
            const FJointData CurrentJoint = CurrentInterpolatedPose.JointData[i];
            const FJointData CandidateJoint = Pose.JointData[i];

            Cost += FVector::DistSquared(CurrentJoint.Velocity, CandidateJoint.Velocity) * WeightSet.Weight_Vel;
            Cost += FVector::DistSquared(CurrentJoint.Position, CandidateJoint.Position) * WeightSet.Weight_Pos;
        }

        // Continuing the current animation is favoured
        if (bFavourCurrentPose && Pose.PoseId == NextPose.PoseId)
        {
            /* … */
        }

        // Keep track of the lowest-cost pose
        if (Cost < LowestCost)
        {
            /* … */
        }
    }

    return LowestPoseId;
}
```
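The excerpt translates into the standalone linear search below. The cost terms mirror the ones visible above. Two parts are assumptions, because those lines were cut off: the weight on the rotational-velocity term (angularMomentum) and the multiplicative favourCurrent bonus for the natural next pose. All types are simplified, hypothetical stand-ins.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Hypothetical, simplified types (not Motion Symphony's).
struct V3 { float x, y, z; };
struct TrajPoint { V3 pos; float yawDeg; };
struct Joint { V3 pos; V3 vel; };
struct Pose { int id; V3 localVel; float rotVel; std::vector<TrajPoint> traj; std::vector<Joint> joints; };
struct TrajWeight { float pos, facing; };
struct JointWeight { float pos, vel; };
struct Calibration {
    float momentum, angularMomentum;
    std::vector<TrajWeight> traj;
    std::vector<JointWeight> joints;
    float favourCurrent;  // assumed multiplier (< 1) applied to the natural next pose
};

float distSq(const V3& a, const V3& b) {
    const float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

// Signed angle difference wrapped to [-180, 180) degrees.
float deltaAngleDeg(float from, float to) {
    float d = std::fmod(to - from + 180.0f, 360.0f);
    if (d < 0.0f) d += 360.0f;
    return d - 180.0f;
}

int lowestCostPose(const Pose& current, const std::vector<TrajPoint>& desired,
                   const std::vector<Pose>& candidates, const Calibration& cal, int nextPoseId) {
    int best = -1;
    float lowest = std::numeric_limits<float>::max();
    for (const Pose& p : candidates) {
        // Momentum terms
        float cost = distSq(current.localVel, p.localVel) * cal.momentum;
        cost += std::fabs(current.rotVel - p.rotVel) * cal.angularMomentum;

        // Trajectory terms
        const std::size_t n = std::min(desired.size(), cal.traj.size());
        for (std::size_t i = 0; i < n; ++i) {
            cost += distSq(p.traj[i].pos, desired[i].pos) * cal.traj[i].pos;
            cost += std::fabs(deltaAngleDeg(p.traj[i].yawDeg, desired[i].yawDeg)) * cal.traj[i].facing;
        }

        // Joint terms
        assert(p.joints.size() == current.joints.size() && cal.joints.size() >= current.joints.size());
        for (std::size_t j = 0; j < current.joints.size(); ++j) {
            cost += distSq(current.joints[j].vel, p.joints[j].vel) * cal.joints[j].vel;
            cost += distSq(current.joints[j].pos, p.joints[j].pos) * cal.joints[j].pos;
        }

        if (p.id == nextPoseId) cost *= cal.favourCurrent;  // favour continuing the current clip
        if (cost < lowest) { lowest = cost; best = p.id; }
    }
    return best;
}
```

With favourCurrent below 1, a candidate that simply continues the current clip wins ties and near-ties. This is the continuity preference described for SchedulePoseSearch.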

Matching Algorithm

Having read GetLowestCostPoseId, we can now summarise the matching algorithm Motion Symphony uses:

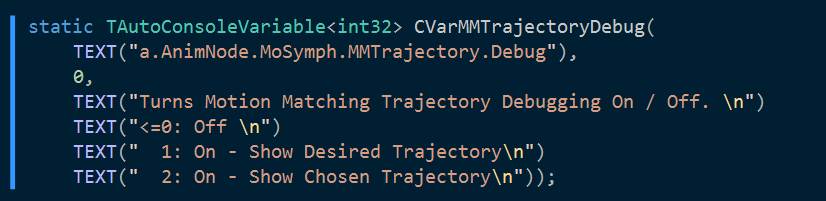

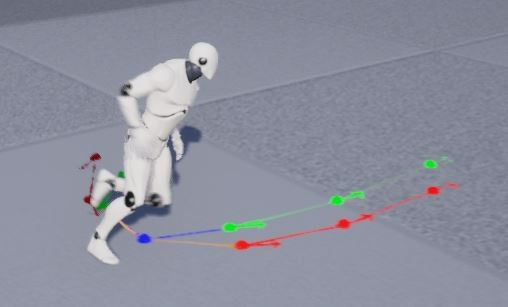

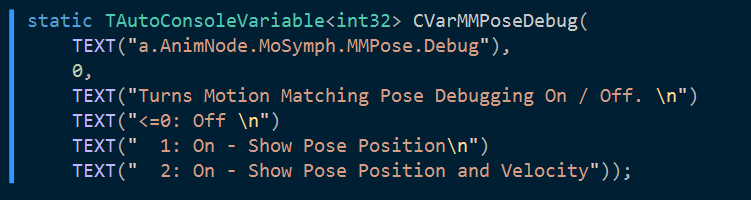

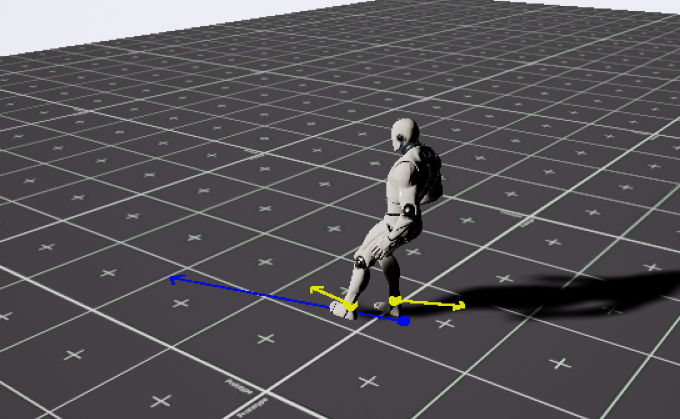
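The cost below is a reading of the GetLowestCostPoseId excerpt above. The weight on the rotational-velocity term and the bFavourCurrentPose adjustment were cut off in the excerpt, so $w_\omega$ is only a placeholder name and the adjustment is not shown. Written as a formula, the cost of a candidate pose $p$ is:

$$
\begin{aligned}
C(p) ={}& w_m \,\lVert \mathbf{v} - \mathbf{v}_p \rVert^2 + w_\omega \,\lvert \omega - \omega_p \rvert \\
&+ \sum_{i=1}^{N_t} \Big( w^{\text{pos}}_i \,\lVert \mathbf{t}_{p,i} - \hat{\mathbf{t}}_i \rVert^2 + w^{\text{face}}_i \,\big\lvert \Delta(\theta_{p,i}, \hat{\theta}_i) \big\rvert \Big) \\
&+ \sum_{j=1}^{N_j} \Big( w^{\text{vel}}_j \,\lVert \mathbf{u}_j - \mathbf{u}_{p,j} \rVert^2 + w^{\text{jpos}}_j \,\lVert \mathbf{q}_j - \mathbf{q}_{p,j} \rVert^2 \Big),
\qquad p^* = \arg\min_{p} C(p)
\end{aligned}
$$

The symbols map to the code as follows:

- $\mathbf{v}, \omega$: LocalVelocity and RotationalVelocity of CurrentInterpolatedPose.
- $\hat{\mathbf{t}}_i, \hat{\theta}_i$: Position and RotationZ of the $i$-th desired trajectory point.
- $\Delta$: FMath::FindDeltaAngleDegrees.
- $\mathbf{u}_j, \mathbf{q}_j$: joint velocity and position.
- $N_t$: the smaller of the number of desired trajectory points and trajectory weights.
- $w_m$: FinalCalibration.Weight_Momentum.
- $w^{\text{pos}}_i, w^{\text{face}}_i$: TrajectoryWeights[i].Weight_Pos and Weight_Facing.
- $w^{\text{vel}}_j, w^{\text{jpos}}_j$: PoseJointWeights[j].Weight_Vel and Weight_Pos.

The minimum is taken over the candidate set returned by the optimization module.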

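Debug Tools

Motion Symphony provides a set of debugging tools. Most of them are enabled from the console and then print data in the viewport or draw the corresponding trajectories. Below are a few commonly used debug tools, how to enable them, and how they are implemented in code.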

Debugging the Trajectory (drawing trajectory lines)

Trajectory drawing is implemented in UpdateAssetPlayer, as follows:

```cpp
const int32 TrajDebugLevel = CVarMMTrajectoryDebug.GetValueOnAnyThread();
if (TrajDebugLevel > 0)
{
    if (TrajDebugLevel == 2)
    {
        DrawChosenTrajectoryDebug(Context.AnimInstanceProxy);
    }

    DrawTrajectoryDebug(Context.AnimInstanceProxy);
}
```

Debugging the Pose (drawing pose positions and velocities)

```cpp
int32 PoseDebugLevel = CVarMMPoseDebug.GetValueOnAnyThread();
if (PoseDebugLevel > 0)
{
    DrawChosenPoseDebug(Context.AnimInstanceProxy, PoseDebugLevel > 1);
}
```

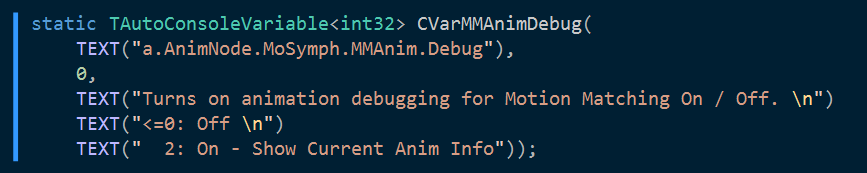

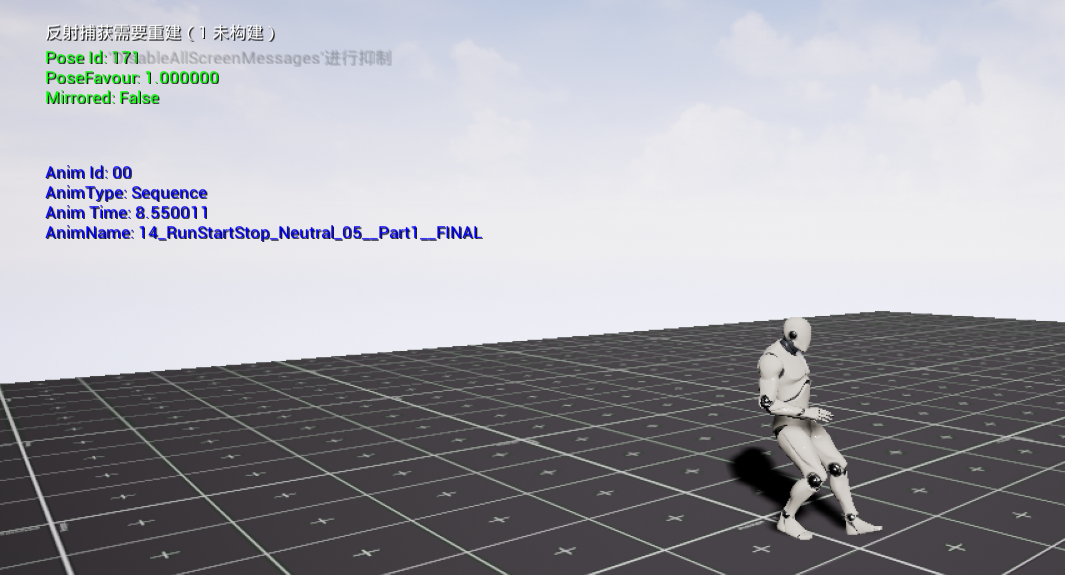

Animation Info Debugging (information about the current pose)

```cpp
// The CVar name was lost in extraction and is marked /* … */
const int32 AnimDebugLevel = /* … */GetValueOnAnyThread();
if (AnimDebugLevel > 0)
{
    DrawAnimDebug(Context.AnimInstanceProxy);
}
```

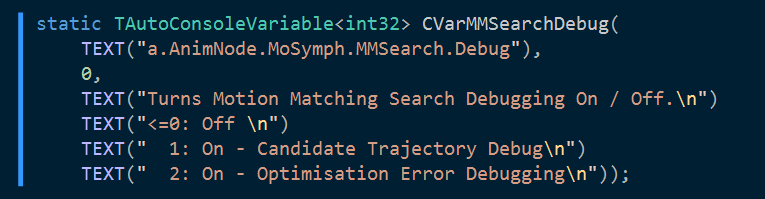

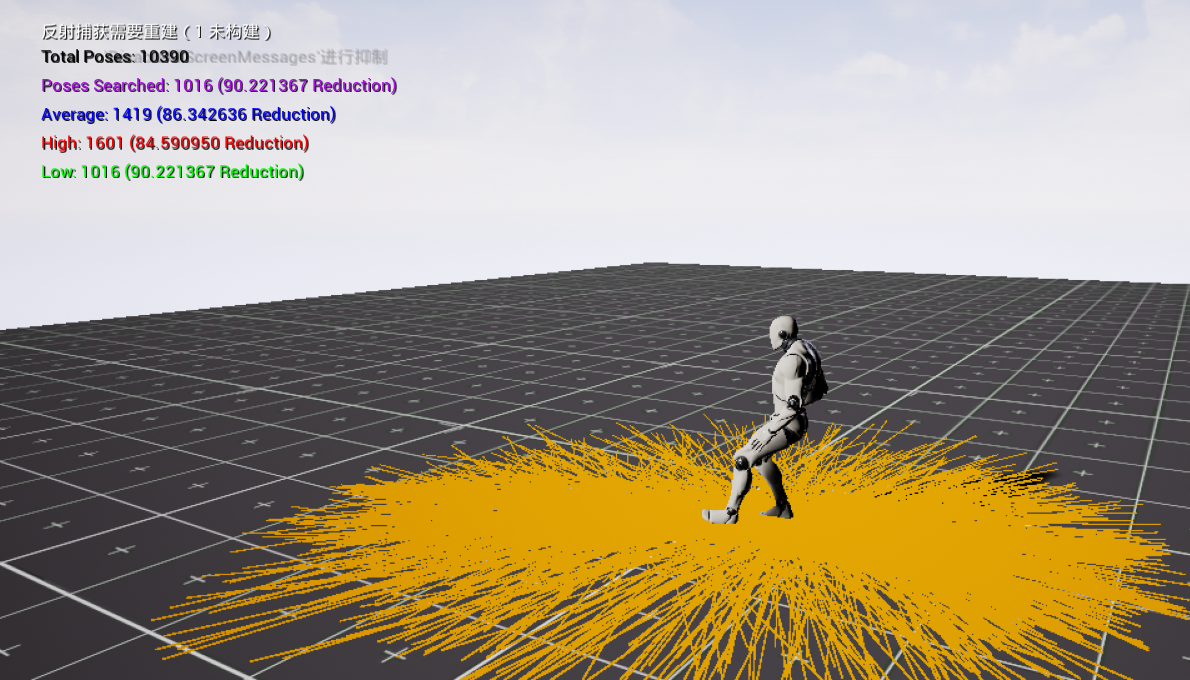

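Search / Optimization Debugging

This tool shows the number of candidate poses and draws the trajectories of all of them. It can also show the error between the optimized search and a linear search.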

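Cost Debugging

Many other MM solutions provide a cost debugging tool that displays the cost of every pose. Motion Symphony has no such tool, so there is no direct way to see the cost of each candidate pose. One could be added later by following the pattern of the debug tools above.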

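Optimization Strategies

Motion Symphony's optimization strategies fall into two categories. The first processes the dataset, defining a candidate pose set for each pose to reduce the amount of data searched. The second ends the search early in the search logic.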

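The dataset-processing strategies are all configured in the Motion Data, and their candidate pose sets are built when the Motion Data is preprocessed. Each strategy is therefore represented by its own asset: MMOptimisation_MultiClustering, MMOptimisation_TraitsBin and MMOptimisation_LayeredAABB. The last one does not appear in the editor and is probably unfinished. All of them inherit from UMMOptimisationModule.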

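K-means Clustering

K-means is a classic machine-learning clustering algorithm. Its goal is to partition a dataset into K clusters (K is a hyperparameter) and to assign each sample to the cluster center it belongs to. Here the poses are divided into K clusters by trajectory, and during matching only poses in the same cluster as the current pose are searched.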

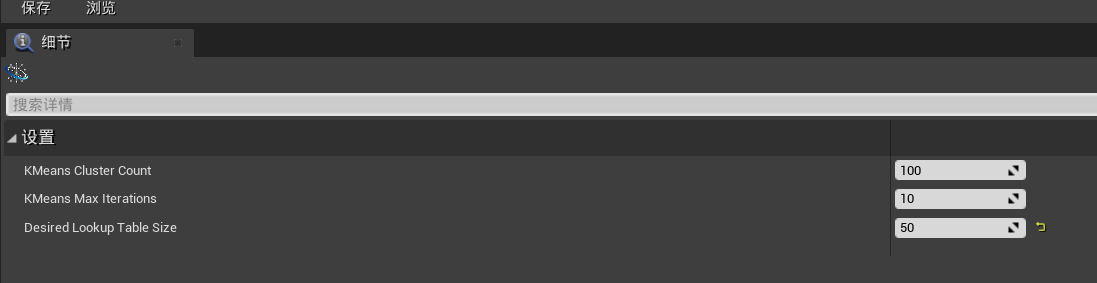

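Motion Symphony defines an asset that implements K-means: MMOptimisation_MultiClustering. It sets the number of K-means clusters, the maximum number of iterations and the desired lookup-table size. The lookup table stores an array of candidate pose sets. Desired Lookup Table Size is the size of that array, and therefore also the number of clusters the pose database is finally divided into. The clustering is implemented in BuildOptimisationStructures, a virtual function defined in the parent class UMMOptimisationModule: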

```cpp
// Core of UMMOptimisation_MultiClustering::BuildOptimisationStructures() (excerpt; lost text marked /* … */)
void UMMOptimisation_MultiClustering::BuildOptimisationStructures(UMotionDataAsset* InMotionDataAsset)
{
    /* … */BuildOptimisationStructures(InMotionDataAsset);

    // Bin poses by their trait combination
    for (FPoseMotionData& Pose : InMotionDataAsset->Poses)
    {
        /* … */FindOrAdd(Pose.Traits);
        /* … */Add(FPoseMotionData(Pose));
    }

    // Cluster each bin separately
    for (auto& TraitPoseSet : PoseBins)
    {
        /* … */FCalibrationData();
        /* … */GenerateFinalWeights(InMotionDataAsset->PreprocessCalibration, /* … */);

#if WITH_EDITORONLY_DATA
        /* … */Clear();
#else
        /* … */FKMeansClusteringSet();
#endif

        /* … */BeginClustering(TraitPoseSet.Value, FinalPreProcessCalibration, KMeansClusterCount, KMeansMaxIterations, true);

        /* … */FindOrAdd(TraitPoseSet.Key);
        /* … */Process(TraitPoseSet.Value, KMeansClusteringSet, FinalPreProcessCalibration, /* … */);

        for (int32 i = 0; i < PoseLookupTable.CandidateSets.Num(); ++i)
        {
            /* … */
            for (FPoseMotionData& Pose : CandidateSet.PoseCandidates)
            {
                /* … */
            }
        }
    }
}
```

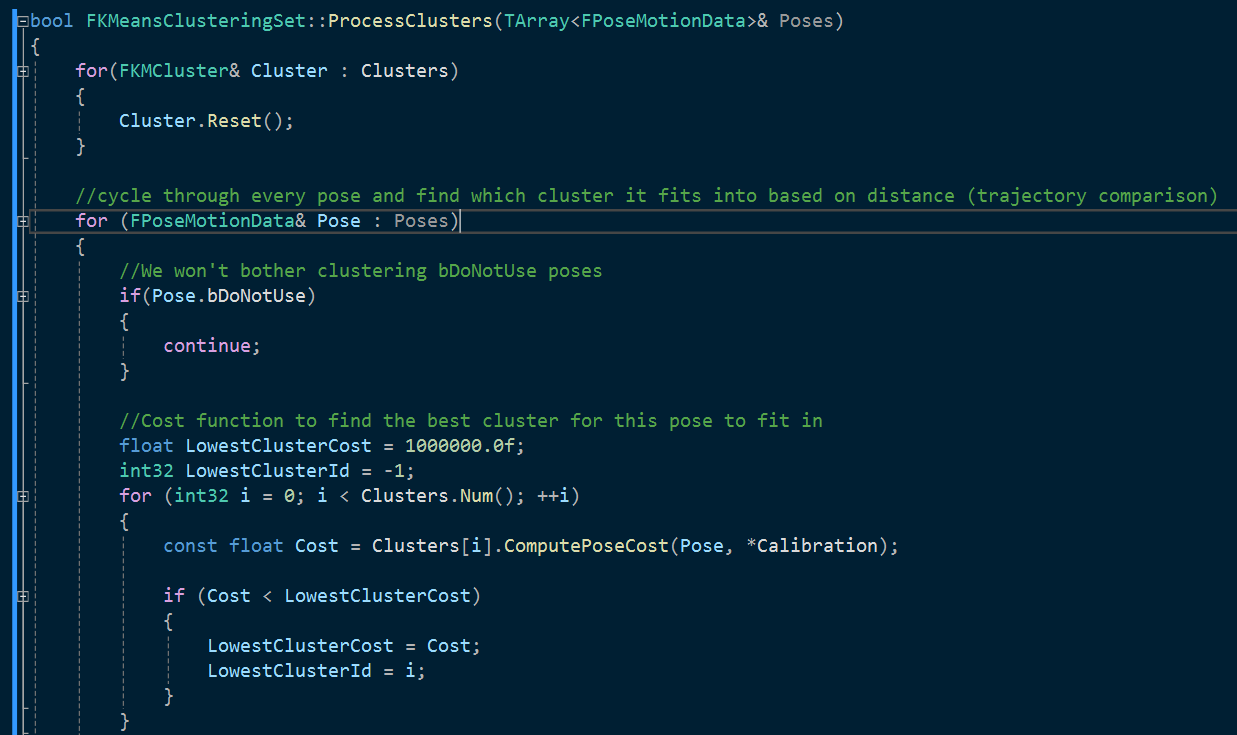

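BeginClustering calls bool FKMeansClusteringSet::ProcessClusters(TArray<FPoseMotionData>& Poses). That function shows that poses are partitioned by trajectory distance, so each pose's candidate set contains poses whose trajectories are close to its own.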
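For reference, plain Lloyd's k-means over per-pose trajectory feature vectors looks like this. It is a generic illustration of the technique, not FKMeansClusteringSet, and it differs in three ways:

- it uses deterministic initialisation (the first k points);
- it uses unweighted squared distance, whereas the plugin passes a calibration;
- it does not build the per-pose candidate sets or the lookup table.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Feature = std::vector<float>;  // e.g. flattened trajectory positions of one pose

float distSq(const Feature& a, const Feature& b) {
    assert(a.size() == b.size());
    float s = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) { const float d = a[i] - b[i]; s += d * d; }
    return s;
}

// Lloyd's k-means; returns the cluster index of each point.
std::vector<int> kMeans(const std::vector<Feature>& pts, int k, int maxIterations) {
    assert(k > 0 && static_cast<std::size_t>(k) <= pts.size());
    std::vector<Feature> centers(pts.begin(), pts.begin() + k);
    std::vector<int> label(pts.size(), -1);

    for (int it = 0; it < maxIterations; ++it) {
        // Assignment step: attach every point to its nearest center.
        bool changed = false;
        for (std::size_t i = 0; i < pts.size(); ++i) {
            int best = 0;
            float bestD = std::numeric_limits<float>::max();
            for (int c = 0; c < k; ++c) {
                const float d = distSq(pts[i], centers[static_cast<std::size_t>(c)]);
                if (d < bestD) { bestD = d; best = c; }
            }
            if (label[i] != best) { label[i] = best; changed = true; }
        }
        if (!changed) break;  // converged

        // Update step: move each center to the mean of its members.
        for (int c = 0; c < k; ++c) {
            Feature sum(pts[0].size(), 0.0f);
            int count = 0;
            for (std::size_t i = 0; i < pts.size(); ++i) {
                if (label[i] != c) continue;
                for (std::size_t d = 0; d < sum.size(); ++d) sum[d] += pts[i][d];
                ++count;
            }
            if (count > 0) {
                for (float& v : sum) v /= static_cast<float>(count);
                centers[static_cast<std::size_t>(c)] = sum;
            }
        }
    }
    return label;
}
```

At runtime only the poses in the current pose's cluster need to be costed, which is where the speed-up comes from.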

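TraitsBin

This strategy searches only within the required Traits. For example, if the MM node's required Traits are set to Walk, only poses tagged Walk are searched. The DoNotUse Tag works on the same principle.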

```cpp
TArray<FPoseMotionData>* UMMOptimisation_TraitBins::GetFilteredPoseList(const FPoseMotionData& CurrentPose,
    const FMotionTraitField RequiredTraits, const FCalibrationData& FinalCalibration)
{
    // Return the bin whose traits match exactly, or nullptr if there is none
    if (PoseBins.Contains(RequiredTraits))
    {
        return &PoseBins[RequiredTraits].Poses;
    }

    return nullptr;
}
```

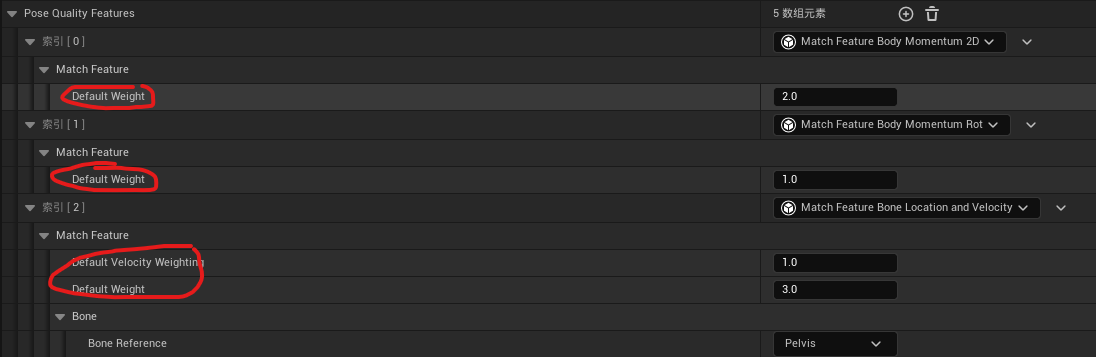
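The trait-bin idea maps directly onto a hash map keyed by the trait combination. In this sketch traits are encoded as a bitmask, which is an assumption: FMotionTraitField's actual representation is not shown here. As in the excerpt, a bin is only returned for an exact match, and nullptr tells the caller to fall back to a linear search. TraitBins and PoseEntry are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>
#include <vector>

using TraitMask = std::uint64_t;  // hypothetical: one bit per trait name (e.g. Walk, Run)

struct PoseEntry { int poseId; TraitMask traits; };

class TraitBins {
public:
    // Build step: put every pose into the bin of its exact trait combination.
    explicit TraitBins(const std::vector<PoseEntry>& poses) {
        for (const PoseEntry& p : poses) bins_[p.traits].push_back(p);
    }

    // Query step: the bin for the required traits, or nullptr if there is none.
    const std::vector<PoseEntry>* filtered(TraitMask required) const {
        auto it = bins_.find(required);
        return it == bins_.end() ? nullptr : &it->second;
    }

private:
    std::unordered_map<TraitMask, std::vector<PoseEntry>> bins_;
};
```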

Motion Symphony 2.0

Motion Calibration Becomes Optional

In version 2.0, Motion Calibration is optional rather than required. In 1.0 the weights had to be configured in a Motion Calibration, whereas in 2.0 they are defined in the Motion Config.

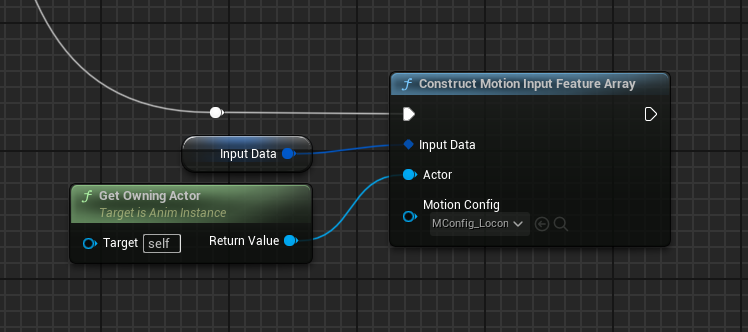

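More General Input Data

In 1.0, MM took a trajectory as input. In 2.0 this became more general input data, FMotionMatchingInputData, which is essentially a wrapper around a float array. It holds the data from all "input response" matching features. The Construct Motion Input Feature Array node fills in this input data.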

Based on the features selected in the MotionConfig, the function calls each feature subclass's SourceInputData:

```cpp
// Core of ConstructMotionInputFeatureArray (excerpt; lost text marked /* … */)
int32 FeatureOffset = 0;
for (TObjectPtr<UMatchFeatureBase> MatchFeature : MotionConfig->InputResponseFeatures)
{
    if (MatchFeature && MatchFeature->IsSetupValid())
    {
        // Each feature writes its own slice of the input array
        /* … */SourceInputData(InputData.DesiredInputArray, FeatureOffset, Actor);
        /* … */Size();
    }
    else
    {
        UE_LOG(LogTemp, Error, TEXT("ERROR: 'ConstructMotionInputFeatureArray' node - Match feature has an invalid setup and cannot be processed."));
    }
}
```

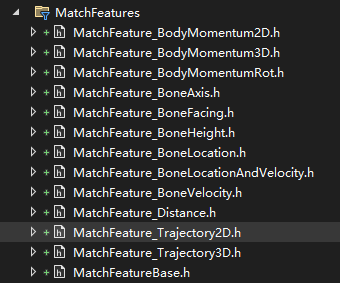

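The figure shows the available feature classes. All of them inherit from MatchFeatureBase and override SourceInputData to write their own data into the input data. For example, the SourceInputData of UMatchFeature_Trajectory2D: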

```cpp
// Core of UMatchFeature_Trajectory2D::SourceInputData (excerpt; lost text marked /* … */)
if (UTrajectoryGenerator_Base* TrajectoryGenerator = InActor->GetComponentByClass<UTrajectoryGenerator_Base>())
{
    const FTrajectory& Trajectory = TrajectoryGenerator->GetCurrentTrajectory();
    const int32 Iterations = FMath::Min(TrajectoryTiming.Num(), Trajectory.TrajectoryPoints.Num());

    for (int32 i = 0; i < Iterations; ++i)
    {
        const FTrajectoryPoint& TrajectoryPoint = Trajectory.TrajectoryPoints[i];

        // Facing: forward vector rotated about the up axis by RotationZ, scaled to length 100
        /* … */FQuat(FVector::UpVector, /* … */DegreesToRadians(TrajectoryPoint.RotationZ)) * FVector::ForwardVector;
        /* … */GetSafeNormal() * 100.0f;

        // Each point occupies 4 floats
        const int32 PointOffset = FeatureOffset + (i * 4);
        if (PointOffset + 3 >= OutFeatureArray.Num())
        {
            UE_LOG(LogTemp, Error, TEXT("UMatchFeature_Trajectory2D: SourceInputData(...) - Feature does not fit in FeatureArray"));
            return;
        }

        // OutFeatureArray[PointOffset] (presumably Position.X) was not captured in the excerpt
        OutFeatureArray[PointOffset + 1] = TrajectoryPoint.Position.Y;
        OutFeatureArray[PointOffset + 2] = RotationVector.X;
        OutFeatureArray[PointOffset + 3] = RotationVector.Y;
    }
}
```
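The layout written by the trajectory feature can be reproduced without Unreal types: four floats per point, namely position X/Y followed by the facing direction scaled to length 100. This sketch assumes the uncaptured first slot holds Position.X. TrajPoint2D and sourceTrajectory2D are hypothetical names. The facing is computed directly as (cos, sin) of the yaw, which is already unit length, instead of rotating FVector::ForwardVector and normalizing.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct TrajPoint2D { float x, y, yawDeg; };  // hypothetical input point

// Writes [pos.x, pos.y, facing.x * 100, facing.y * 100] per point starting at featureOffset.
// Returns false if the feature does not fit (the plugin logs an error in that case).
bool sourceTrajectory2D(std::vector<float>& out, int featureOffset, const std::vector<TrajPoint2D>& traj) {
    assert(featureOffset >= 0);
    const float kDegToRad = 3.14159265358979f / 180.0f;
    for (std::size_t i = 0; i < traj.size(); ++i) {
        const int p = featureOffset + static_cast<int>(i) * 4;  // 4 floats per point
        if (p + 3 >= static_cast<int>(out.size())) return false;
        const float yaw = traj[i].yawDeg * kDegToRad;
        out[static_cast<std::size_t>(p) + 0] = traj[i].x;
        out[static_cast<std::size_t>(p) + 1] = traj[i].y;
        out[static_cast<std::size_t>(p) + 2] = std::cos(yaw) * 100.0f;
        out[static_cast<std::size_t>(p) + 3] = std::sin(yaw) * 100.0f;
    }
    return true;
}
```

Scaling the unit facing vector to 100 puts it on a magnitude comparable to positions measured in centimetres, so neither part dominates an unweighted distance.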

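No Optimization Strategy to Configure

In 1.0 an optimization module such as clustering or AABB could be configured. Version 2.0 removed this option and uses AABB for search optimization by default.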
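This report does not describe how the AABB search in 2.0 works internally. The sketch below only illustrates the general idea of AABB pruning. Feature vectors are grouped into axis-aligned boxes. The distance from the query to a box is a lower bound for all of its members, so a whole box is skipped when that bound is no better than the best match so far. The grouping is assumed to be given, and every name is hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

using Feature = std::vector<float>;

// Axis-aligned bounding box around a group of pose feature vectors.
struct Box {
    Feature lo, hi;            // per-dimension bounds
    std::vector<int> members;  // indices of the poses inside the box
};

float distSq(const Feature& a, const Feature& b) {
    assert(a.size() == b.size());
    float s = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) { const float d = a[i] - b[i]; s += d * d; }
    return s;
}

// Squared distance from q to the box: a lower bound on q's distance to any member.
float boxDistSq(const Box& b, const Feature& q) {
    float s = 0.0f;
    for (std::size_t i = 0; i < q.size(); ++i) {
        float d = 0.0f;
        if (q[i] < b.lo[i]) d = b.lo[i] - q[i];
        else if (q[i] > b.hi[i]) d = q[i] - b.hi[i];
        s += d * d;
    }
    return s;
}

// Nearest-neighbour search that skips boxes whose lower bound cannot beat the best so far.
int searchWithAabb(const std::vector<Feature>& poses, const std::vector<Box>& boxes,
                   const Feature& q, int* boxesVisited) {
    int best = -1;
    float bestD = std::numeric_limits<float>::max();
    int visited = 0;
    for (const Box& b : boxes) {
        if (boxDistSq(b, q) >= bestD) continue;  // pruned
        ++visited;
        for (int m : b.members) {
            const float d = distSq(poses[static_cast<std::size_t>(m)], q);
            if (d < bestD) { bestD = d; best = m; }
        }
    }
    if (boxesVisited) *boxesVisited = visited;
    return best;
}
```

The result is the same as a linear search; only the amount of work changes, depending on how well the boxes separate the data.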

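Per-Feature Costs Can No Longer Be Computed Explicitly

In 2.0 the set of features is much more configurable, so the code cannot know in advance which features have been selected. The cost computation therefore cannot explicitly produce terms such as a trajectory cost or a pose cost. Instead, it operates uniformly on the feature array.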
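When every feature is just a slice of one float array, the cost reduces to a single weighted distance over that array. This sketch assumes a per-element weighted squared distance; the report does not state the exact form used in 2.0. A per-feature breakdown, such as the Cost debugging tool suggested earlier would need, could still be obtained by summing over one feature's [offset, offset + size) slice. That is a hypothetical approach, not something the plugin provides.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Weighted squared distance over the slice [offset, offset + size) of flat feature arrays.
float featureCost(const std::vector<float>& query, const std::vector<float>& candidate,
                  const std::vector<float>& weights, std::size_t offset, std::size_t size) {
    assert(query.size() == candidate.size() && query.size() == weights.size());
    assert(offset + size <= query.size());
    float cost = 0.0f;
    for (std::size_t i = offset; i < offset + size; ++i) {
        const float d = query[i] - candidate[i];
        cost += weights[i] * d * d;
    }
    return cost;
}

// Total cost: the whole array is treated uniformly, with no notion of "trajectory" or "pose".
float totalCost(const std::vector<float>& query, const std::vector<float>& candidate,
                const std::vector<float>& weights) {
    return featureCost(query, candidate, weights, 0, query.size());
}
```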